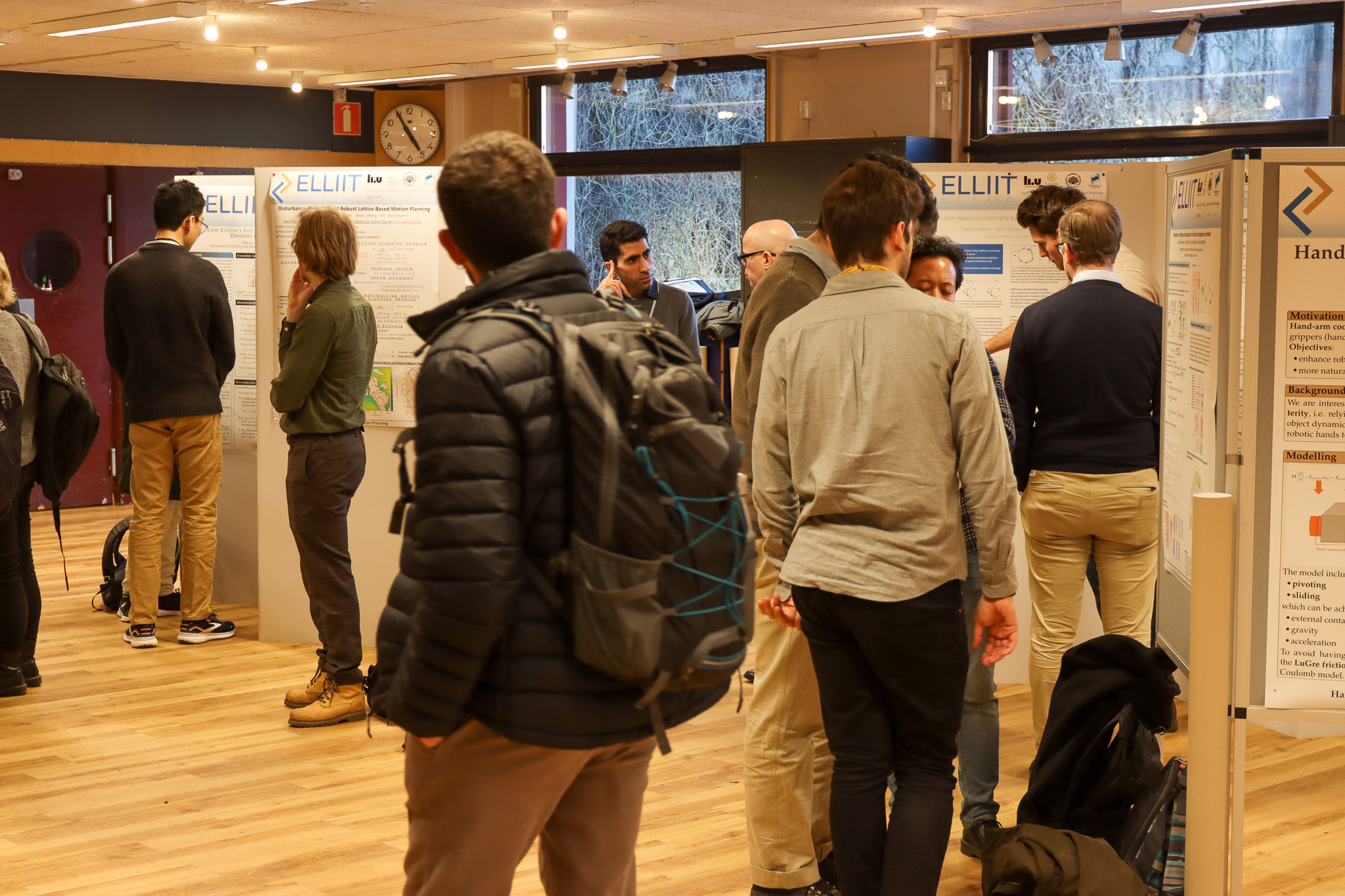

ELLIIT is a strategic research environment in information technology and mobile communications, funded by the Swedish government in 2010. With four partners (Linköping University, Lund University, Halmstad University and Blekinge Institute of Technology), ELLIIT constitutes a platform for both fundamental and applied research, and for cross-fertilization between disciplines and between academic researchers and industry experts. ELLIIT stands out for the quality and visibility of its publications and for its ability to attract highly talented researchers.

Sign up for the newsletter

The ELLIIT Newsletter is sent twice a year and contains information about research activities, funding opportunities, publications and events.